During a recent interview with Epicenter Bitcoin, Blockstream Co-Founder and President Adam Back discussed the various forms of centralization that have crept into Bitcoin. While much of the community worries most about the centralization of mining pools and hashing power, Back took the time to explain the overlooked importance of having a large number of full nodes on the network. As the blockchain has grown, it has become more difficult to process and store every transaction on a local hard drive. Back’s main contention is that full nodes are needed to act as auditors on the network.

How Full Nodes Affect Mining Centralization

Although miners partially hold each other accountable, Back contends that full node auditors are needed to keep them honest. During the Epicenter Bitcoin interview, he noted:

“If we have a very high ratio of economic dependent auditors, we can tolerate a more centralized ratio of large miners and vice versa because miners fighting against each other — vying for block rewards — partly hold each other honest because the sort of policy of not building on top of incorrect blocks. That’s a consensus rule, right? But what makes that a consensus rule is partly the full node auditors are looking at that.”

Full Nodes Are a Key Component of the Block Size Debate

Back then turned to full nodes in the context of the block size limit debate, explaining that some proposals do not seem to fully account for the higher cost of operating a full node that a block size limit increase would bring:

“I think I see in some of the more aggressive block [size limit] proposals articulation of less emphasis on the full node auditors. I’ve seen people be, potentially, quite content for full node auditors to, over time, be only possible in data centers and increasingly high-bandwidth, more expensive data centers.”
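As a rough illustration of the storage side of that cost (a back-of-the-envelope bound, not a figure from the interview), assume Bitcoin’s ten-minute average block interval, or about 144 blocks per day, and call the block size limit S. If every block were full, the blockchain would grow by at most:

```latex
\text{annual growth} \;\le\; S \times 144\,\tfrac{\text{blocks}}{\text{day}} \times 365\,\tfrac{\text{days}}{\text{year}} \;=\; 52{,}560\,S
\qquad
S = 1\,\text{MB} \;\Rightarrow\; \text{annual growth} \le 52{,}560\,\text{MB} \approx 52.6\,\text{GB}
```

The bound scales linearly with S, so raising the limit raises worst-case storage growth in proportion. It covers raw block data only and leaves out the bandwidth, memory, and CPU validation costs that also grow with block size.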

Bitcoin Creator Satoshi Nakamoto understood that not every user would be able to run their own full node once adoption reached a certain breaking point, and back in 2010 he claimed that there would never be more than 100,000 full nodes on the network. Satoshi’s understanding of the potential cost of running a full node is what led him to include an outline of simplified payment verification (SPV) in the original white paper.
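The idea behind SPV is that a lightweight client keeps only block headers and checks that a transaction is included in a block by verifying a Merkle branch against the Merkle root in that block’s header. The sketch below illustrates just that inclusion check, assuming Bitcoin-style double SHA-256 and duplication of the last hash on odd-sized levels. The function names are hypothetical, byte-order display conventions are ignored, and a real SPV client must also verify proof of work and header chaining, which this sketch does not do.

```python
import hashlib


def dsha256(data: bytes) -> bytes:
    """Double SHA-256, the hash Bitcoin uses for txids and Merkle nodes."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Hash pairs level by level; an odd level duplicates its last hash."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [dsha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_branch(leaves: list[bytes], index: int) -> list[bytes]:
    """Collect sibling hashes from leaf to root (what a full node would
    hand to an SPV client as proof of inclusion). Hypothetical helper."""
    branch = []
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        branch.append(level[index ^ 1])  # sibling is the other member of the pair
        level = [dsha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return branch


def verify_branch(leaf: bytes, branch: list[bytes], index: int, root: bytes) -> bool:
    """SPV-side check: rebuild the path to the root using only the branch."""
    h = leaf
    for sibling in branch:
        # Even index: we are the left child; odd index: the right child.
        h = dsha256(h + sibling) if index % 2 == 0 else dsha256(sibling + h)
        index //= 2
    return h == root


# Usage with made-up "transactions" (real txids hash serialized transactions).
txids = [dsha256(f"tx{i}".encode()) for i in range(5)]
root = merkle_root(txids)
proof = merkle_branch(txids, 3)
print(verify_branch(txids[3], proof, 3, root))  # True
print(verify_branch(txids[2], proof, 3, root))  # False
```

The client only needs a handful of hashes per transaction rather than the full block, which is why SPV is so much cheaper than running a full node; the trade-off is that it trusts the most-work chain rather than validating every rule itself.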

Although Back clearly explained the need to keep the cost of maintaining a full node low, he also noted that the development community should avoid moving to an extreme in the opposite direction:

“Now, of course, you can’t constrain the network so that somebody on dial-up or a GSM modem or something really low powered and with a Raspberry Pi or something [is able to run a full node] — that would be too constraining and might already run into troubles. But it is a balance and you do want to make it easy and not too onerous to run full node auditors because you want the majority of medium-sized power users and so on to run full nodes to assure themselves more robustly of the security of the system. And actually, the security of the system as a whole benefits from that. It’s not just something they do for themselves. They run it for their own security. The fact that they run it for their own security holds the system secure because they would reject payments that didn’t check out from their full node, and that information would flow back to the SPV users.”

Full Nodes as a Measurement of Decentralization

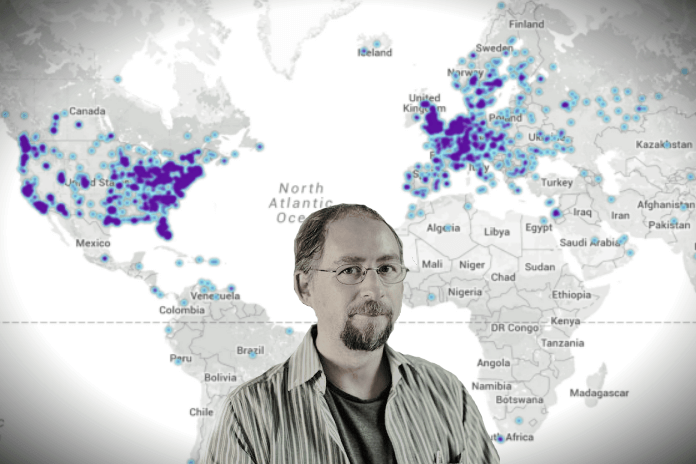

Another topic that has drawn attention in the Bitcoin community lately is how decentralization can be measured in a peer-to-peer digital cash system. Truthcoin Creator Paul Sztorc has written a lengthy blog post on the idea that the cost of operating a full node could be used as a metric for decentralization, and Back seemed at least sympathetic to this idea during his Epicenter Bitcoin interview:

“If you boil it down going down from the requirements about what Bitcoin is and why decentralization and permissionless innovation [are important], you can translate that into what are the mechanisms that make bitcoin secure, and the full node auditors — it’s not just running a full-node, you have to actually use it for transactions. It’s the amount of economic interest that is relying on full-nodes and has direct trust and control of those full-nodes. This is what holds the system to a higher level.”

Back then described the sorts of technical issues that can make it more difficult to operate a full node and degrade decentralization:

“The things that degrade this are things like block sizes getting full, memory bottlenecks, CPU validation bottlenecks, and companies outsourcing running full-nodes to third-parties, or running their entire bitcoin business by API to a third-party. There are some tradeoffs here; the software is sort of technical to run, and some startups may not have the expertise to run any software at all. But I do think we need a high proportion of full-nodes.”

Although Back briefly discussed some of the work by Bitcoin Core Developer Gavin Andresen and Bitcoin Core Contributor Matt Corallo that has lowered the bandwidth, CPU, and other hardware requirements of operating a full node, there is clearly still plenty more work to be done in this area. Much of the Bitcoin scalability debate is currently focused on the block size limit, but as Peter Todd and others have noted, the work by Gregory Maxwell, Pieter Wuille, and other Bitcoin Core developers has been vital in allowing Bitcoin to scale to 1 MB blocks. The Scaling Bitcoin workshop in Montreal this weekend should give everyone a clear view of the current options for bringing Bitcoin to millions of new users over the next few years.